Table of Contents

- Measuring Your Data Quality Gap

- How does Azure natively handle data quality issues?

- Azure data quality tools you should use

- Closing the Data Quality Gap with Data Governance and Management

- How to create an Azure data quality framework

- Enforce Data Governance Standards with Master Data Management

- Maximize the Return on Your Azure Investment with Trusted Data

In this four-part blog series, we explore the critical, complementary disciplines of data governance and master data management (MDM). While these principles hold true for any deployment, here we focus on the Azure cloud.

This is Part 2 in the series. Be sure to read Part 1 on whether you can trust your data in Azure, Part 3 on how MDM can unleash the power of your governance program and Part 4 on why Microsoft Purview and Profisee MDM are the clear choice for governance and MDM in Azure.

Organizations often invest in a cloud migration as part of a broader IT and business objective, including undergoing a digital transformation, developing a business intelligence (BI) or analytics program, increasing operational efficiency or meeting compliance requirements.

And Microsoft’s Azure cloud-computing stack is an attractive option with dedicated applications and services for data governance (Microsoft Purview), analytics (Azure Synapse) and data integration (Azure Data Factory).

But once data is combined from multiple source systems and databases in Azure, organizations often find that their enterprise data is of poor quality, missing, incomplete, duplicated or more complicated than they expected.

You may have identified the symptoms of poor data quality and have felt the impacts within your organization. But you may not know that poor data quality — and lack of trusted data in general — can be the biggest risk to your Azure investment.

And when it comes to poor data quality, you are not alone. In fact, 60% of organizations reported under-investing in their enterprise-wide data strategy, preventing valuable data from being broadly used, according to a 2021 survey by Harvard Business Review Analytic Services.

Harvard Business Review on the Path to Trustworthy Data

The survey reports the share of respondents who:

- say having a strong MDM is important to ensuring their future success

- believe their organizations are under-investing in their enterprise-wide data strategy

- say their organizations rely on more than 6 data types that are essential to business operations

- have employed MDM and say their organizations’ approach to MDM is moderately or very effective

According to a report from McKinsey, poor data quality currently costs companies around 15-25% of their revenue annually, and by 2025, most enterprise data strategies will focus on improving data quality to extract more value from data-driven initiatives.

But there are proven steps you can take to improve data quality throughout your organization.

Read on to learn how you can define your business objectives and identify relevant data sources to measure the quality gap in your data and then use data governance and master data management to close the gap and build a strong foundation of trusted data.

Measuring Your Data Quality Gap

When identifying and remediating data quality issues, it is often helpful to start with the end in mind. First, define your objective.

Common examples of enterprise IT initiatives include:

- Digital transformation: reimagining your business for the digital age by using technology to create new business processes or modify existing ones

- Business Intelligence (BI) or analytics: implementing data management tools to understand business data and derive insights to drive the business

- Increasing operational efficiency: increasing the ratio of your business output (e.g., manufacturing volumes, revenue, customer engagements) to your resource inputs (e.g., capital, person-hours, raw materials) — in other words, anything to make the business more efficient!

- Maintaining compliance: the ability to act according to a market demand or regulatory requirement by implementing business processes and safeguards. Common examples include privacy and the safeguarding of sensitive information.

In today’s digital age, nearly all business objectives have enterprise data at their core. And your ability to accomplish these objectives is a function of the quality of that data across your organization. Even taking advantage of advanced technologies like machine learning and artificial intelligence requires data that is consistent and complete if they are to make the inferences and deliver the insights you are seeking.

Mapping Business Initiatives to Data Types or Domains

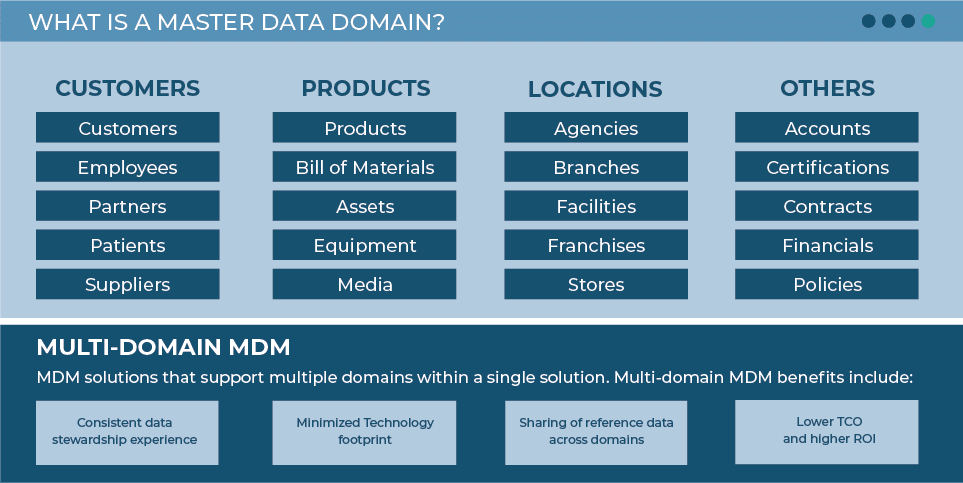

To better define your data needs for your specific objective, it is helpful to look at the data type, or domain, involved in the objective — bearing in mind there will usually be multiple:

- Customer data: a full 360-degree customer view of all personal, behavioral and demographic data across sales, marketing and customer service interactions. Variations on ‘customer’ data include people and businesses as well as individuals and households. In other industries such as healthcare, a similar (but not identical) model might be used for patients and providers (doctors, nurses, etc.).

- Product data: can vary significantly across industries and can include name, description, SKU, price, dimensions and materials as well as relevant taxonomies, hierarchies, relationships and metadata. Note that widely different sets of attributes may be required for different product families (e.g., shoes and shovels require different sets of attributes to be properly identified).

- Location data: includes geospatial data like latitude-longitude, city, state or region as well as business-specific information like line of business (LOB), sales region, services provided and number of employees based there

- Reference data: can take many forms, but these are the ‘simple’ lookup tables included in many applications across the enterprise, from the approved list of state codes to sales regions, cost center hierarchies and standard diagnosis codes in healthcare. They often seem so ‘simple’ that they can be overlooked, but they cause issues when they are inconsistent across systems

- Other: all other data types, including account certifications, contracts, financials, policies, etc.

| Primary Data Type / Domain | Examples by Organization | Sample Attributes |
| --- | --- | --- |
| Customer | Customers, Employees, Partners, Patients, Suppliers | Demographics, Purchase History, Contact information, Preferences, Certifications |
| Product | Products, Bill of Materials, Assets, Equipment, Media | SKU, Price, Dimensions, Materials, Taxonomies/Relationships |
| Location | Agencies, Branches, Facilities, Franchises, Stores | Latitude & Longitude; Address; City, State, County; Sales Region; Line of Business |
| Reference Data | Standard codes, Cost center hierarchies, Lookup tables, Dimension tables | |
| Other | Accounts, Certifications, Contracts, Financials, Policies | Account, Approval Status, Expiration Date, Parties, Regulatory Authorization |

From here, you can begin mapping your primary business objective with the necessary data domains.

For example, to improve customer service you may need to understand which products are most frequently purchased and factor that into your customer service training. Or to effectively cross-sell and up-sell to existing accounts, you may need to understand whether customers more often start with a checking account or mortgage loan and build your targeted marketing campaigns around the products they have the highest propensity to buy.

What the above examples illustrate is the need for a multidomain data quality strategy. Indeed, they both involve data from multiple domains because no true business problem can be solved with only a single myopic view of the underlying data.

But don’t get discouraged. While all the domains interact with one another, it is still critical to prioritize your data and break up your data management initiative into phases. As the adage about “boiling the ocean” warns, your project charter shouldn’t be to “govern and manage all company data.”

The data discussed above is master data, the core, non-transactional data used across your enterprise. Unlike transactions, which your business may log on the order of millions of times per day, master data is relatively slow-moving.

But while master data is smaller in volume than transactional data, it is almost always higher in complexity. So it is critical to review both the data itself and the relationships between data types. For example, a clothing retailer may sell dozens of types of running shoes throughout multiple regions that each can be configured in different sizes and colors.

More than just a dozen shoe products, you have myriad relationships among their attributes. You need to consider and define these complicated relationships and relevant metadata to design, execute and enforce a robust data governance program.
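To make the scale of those relationships concrete, here is a minimal sketch that enumerates the variants of a small, entirely hypothetical shoe catalog. The model names, sizes, colors and regions are illustrative assumptions, not data from any real retailer.

```python
from itertools import product

# Hypothetical catalog for one retailer; every value here is illustrative.
models = ["Trail Runner", "Road Racer", "Daily Trainer"]
sizes = [7, 8, 9, 10, 11, 12]
colors = ["black", "blue", "red"]
regions = ["Northeast", "Southeast", "West"]

# Each sellable variant is one combination of model, size and color.
variants = list(product(models, sizes, colors))

# Each variant may also be offered in each region, which adds a second
# relationship on top of the product hierarchy itself.
offerings = list(product(variants, regions))

print(len(models), "models")
print(len(variants), "variants")
print(len(offerings), "variant-region offerings")
```

Even three models expand into dozens of variants and well over a hundred variant-region combinations, which is why the relationships need to be defined up front rather than discovered later.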

Scan and Collate Your Master Data by Source System

It isn’t uncommon for an enterprise to have their customer, product, location and other data spread across disparate systems. Perhaps you keep records of customer purchases and how they interact with your marketing efforts in your CRM while billing and payment terms are stored in a different system.

Each system then holds a duplicate record of a contact or account, and the records likely have both overlap and discrepancies. For example, you may have customer names or their organization listed differently across systems (e.g., ‘Crete Carrier Corp’ in your CRM and ‘Crete Carrier Corporation’ in your ERP), preventing records from being matched in your analytics platform.
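As a rough illustration of why these records fail to match and how a standardization rule can help, the sketch below normalizes company names before comparing them. The suffix map and the `normalize_company_name` helper are hypothetical; a production matching engine would use far richer rules.

```python
import re

# Hypothetical suffix map; a real one would be longer and governed centrally.
SUFFIX_SYNONYMS = {
    "corp": "corporation",
    "inc": "incorporated",
    "co": "company",
    "ltd": "limited",
}


def normalize_company_name(name: str) -> str:
    """Lowercase, drop punctuation and expand common legal suffixes."""
    cleaned = re.sub(r"[^\w\s]", " ", name.lower())
    tokens = cleaned.split()
    return " ".join(SUFFIX_SYNONYMS.get(tok, tok) for tok in tokens)


crm_name = "Crete Carrier Corp"
erp_name = "Crete Carrier Corporation"

# An exact comparison treats the two spellings as different companies.
print(crm_name == erp_name)

# After normalization the two records share the same match key.
print(normalize_company_name(crm_name) == normalize_company_name(erp_name))
```

The first comparison prints `False` and the second prints `True`, showing how a simple, documented rule lets an analytics platform join records that exact matching would keep apart.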

Scale these duplicates and inconsistencies across systems, regions and departments, and enterprise data can quickly become siloed, duplicated and inaccurate: a shaky foundation for any business initiative! Suddenly, answering simple questions about a customer’s payment history, propensity to purchase another product or customer service interactions requires consulting a half-dozen systems or database administrators.

This example illustrates the importance of identifying and scanning source systems for types, or domains, of data and determining which systems contain the most reliable data for your most critical data types and which can potentially be helpful secondary sources to fill in gaps.

How does Azure natively handle data quality issues?

Azure offers several built-in tools and services to address data quality challenges, helping organizations maintain accurate, consistent and reliable data. One of the primary ways Azure supports data quality is through Azure Data Factory (ADF), which provides data integration capabilities that can detect and correct anomalies. ADF enables the transformation of raw data into structured formats, ensuring that data flows across systems remain clean and usable.

In addition, Microsoft Purview, a unified data governance solution, plays a critical role in data quality by offering automated data discovery and lineage tracking. This helps to identify data inconsistencies, duplications and incomplete records. Purview allows organizations to establish rules and standards for data entry, ensuring that information complies with predefined quality requirements.

Azure Synapse Analytics further enhances data quality by enabling the ingestion of massive amounts of structured and unstructured data while applying rules for validation and enrichment. This ensures that data processed through Synapse meets quality thresholds before it’s used for analytics.

Together, these native Azure services create an environment where data quality is proactively managed and maintained. For more complex and comprehensive data quality needs, however, organizations often turn to third-party solutions like Profisee’s master data management (MDM) platform to fill gaps and ensure that data quality meets enterprise-wide standards.

Azure data quality tools you should use

In addition to the native tools offered by Azure, organizations often leverage complementary solutions to address more complex data quality challenges. These tools work alongside Azure to help ensure your data remains accurate, consistent, and fit for purpose.

One powerful option is data quality management tools that focus on detecting and correcting data errors, validating data against predefined rules, and standardizing formats across systems. These tools ensure your data meets internal quality standards before it is integrated into analytical or operational systems.

Data catalogs are another essential tool for enhancing data quality in an Azure environment. They provide metadata management and help track data lineage, allowing users to better understand where data originates, how it’s transformed, and who is using it. A data catalog enables users to discover and assess the quality of data sources before incorporating them into workflows, reducing errors caused by using outdated or incorrect information.

For organizations managing large-scale data integrations, ETL (Extract, Transform, Load) tools can significantly boost data quality. ETL tools are designed to cleanse, transform, and enrich data as it moves between systems. By embedding quality checks and validation steps within your ETL processes, you can catch and correct issues before the data reaches downstream systems.

Finally, master data management (MDM) solutions are critical when managing data across multiple domains, such as customers, products, or suppliers. MDM tools help ensure that core data is accurate, de-duplicated, and synchronized across various applications. By integrating MDM with Azure, you can establish a single source of truth for your most critical, widely shared data, driving higher data quality and improving trust in your organization’s insights.

Using these advanced tools alongside Azure helps close the gaps that native solutions may not address, providing a comprehensive approach to maintaining high data quality.

Closing the Data Quality Gap with Data Governance and Management

Now that you have identified your critical data types, organized them by domain and determined which source systems house the records of that data, it is time to begin the work of data governance.

Though governance technically does not require a dedicated technology platform, data governance solutions like Microsoft Purview have made it easier for organizations to scale their governance efforts and leverage their accumulated data to support trusted analytics and new or improved business processes.

Establish Business Definitions, Determine Lineage and Create Data Quality Rules

Whether employing a dedicated governance application or simply using a data governance framework, you need to first define your data types and sources, specify your data quality requirements, assign ownership, measure effectiveness and ultimately enforce data standards.

At a high level, data governance often includes:

- Resolving definitional conflicts between sources for each data type

- Recording any subsequent technical and business definitions, lineage and mapping or transformation rules required

- Creating and documenting data quality rules

- Adjusting the data model to reflect these governance rules.

| Data Governance Tactic | Example | Business Impact |
| --- | --- | --- |
| Resolving definitional conflicts | CRM refers to B2B re-sellers as a ‘partner,’ but financial reporting considers them a ‘customer’ | Holistically measure revenue across channels |
| Defining and logging rules in business glossary | Define ‘region’ as one of six delineated U.S. sales territories | Business glossary serves as ‘single source of truth’ to begin rectifying data across sources |
| Creating and documenting data quality rules | CRM has no minimum character requirement and allows special characters for ‘business name’ while ERP requires 25 characters and only supports plain text | Ensure consistent, complete data throughout the enterprise through formal rules and validation mechanisms |
| Adjusting the data model to reflect governance rules | Marketing department does not realize the address field is separate from the ordering/shipping address | Data governance framework is visible to all stakeholders |
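Rules like the ‘region’ and ‘business name’ examples in the table become enforceable once they are written down as checks. The sketch below is one hedged way to express them in Python. The six territory names are placeholders, and reading the ERP constraint as "at most 25 characters of letters, digits and spaces" is an assumption made for illustration.

```python
import re

# Hypothetical glossary entry: the six sales territories are placeholders.
ALLOWED_REGIONS = {
    "Northeast", "Southeast", "Midwest", "Southwest", "Mountain", "Pacific",
}

# Assumed reading of the ERP constraint: at most 25 characters, and only
# plain letters, digits and spaces.
MAX_NAME_LENGTH = 25
PLAIN_TEXT = re.compile(r"[A-Za-z0-9 ]+")


def check_record(record: dict) -> list[str]:
    """Return a list of rule violations for one account record."""
    problems = []
    name = record.get("business_name", "")
    if not name:
        problems.append("business_name is missing")
    else:
        if len(name) > MAX_NAME_LENGTH:
            problems.append(f"business_name exceeds {MAX_NAME_LENGTH} characters")
        if not PLAIN_TEXT.fullmatch(name):
            problems.append("business_name contains special characters")
    if record.get("region") not in ALLOWED_REGIONS:
        problems.append("region is not in the business glossary")
    return problems


good = {"business_name": "Crete Carrier Corporation", "region": "Midwest"}
bad = {"business_name": "Acme & Sons, Inc.", "region": "Central"}

print(check_record(good))
print(check_record(bad))
```

The first record passes with an empty list, while the second is flagged for special characters and an unrecognized region. Keeping the rules in one place means the CRM and ERP feeds can be validated against the same definitions.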

While data governance as a strategic framework can help organizations best define how to manage their data, they still need to enforce these governance standards. Indeed, the previous examples only constitute the “policy creation and management” phase of data governance. Executing those policies and then monitoring their effectiveness is where IT leaders can truly demonstrate the value of their governance initiatives and unleash the value of their Azure investment.

How to create an Azure data quality framework

A survey by Gartner found that 59% of organizations do not measure data quality, making it challenging to track and improve their processes.

Building a robust data quality framework within Azure is crucial to ensuring that your organization’s data is accurate, reliable and valuable for decision-making. A strong framework incorporates best practices across multiple dimensions of data quality, and each step is essential for preventing data issues before they impact your business.

Here’s how to create an effective Azure data quality framework:

Define clear data quality objectives

Start by identifying your organization’s specific data quality needs and goals. Consider what constitutes “high-quality” data for your use cases, whether that means accuracy, completeness, consistency or timeliness. By aligning your objectives with business outcomes, you can tailor your data quality framework to address the most important challenges.

Establish data quality dimensions

Successful data quality management requires focusing on several key data quality dimensions, such as:

- Accuracy: Ensure that data correctly reflects real-world entities or events.

- Completeness: Identify and fill missing values to maintain data integrity.

- Consistency: Standardize data across different sources and systems to avoid conflicting information.

- Timeliness: Keep data up to date and relevant by integrating real-time or near-real-time data pipelines where needed.

These dimensions should guide the creation of data quality rules and validation checks within your Azure environment.
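As a minimal sketch of how these dimensions can be turned into numbers, the example below scores a handful of hypothetical customer records for completeness, consistency and timeliness, then compares the scores to assumed thresholds. Accuracy is left out because it requires a trusted reference to compare against. The records, the reference list of states and the threshold values are all illustrative assumptions.

```python
from datetime import date

# Hypothetical customer records; None marks a missing value.
records = [
    {"id": 1, "email": "a@example.com", "state": "GA", "updated": date(2024, 5, 1)},
    {"id": 2, "email": None, "state": "Georgia", "updated": date(2021, 1, 15)},
    {"id": 3, "email": "c@example.com", "state": "NE", "updated": date(2024, 3, 20)},
    {"id": 4, "email": "d@example.com", "state": None, "updated": date(2023, 11, 2)},
]

VALID_STATES = {"GA", "NE"}  # stand-in for a governed reference list
AS_OF = date(2024, 6, 1)     # fixed date so the example is reproducible
MAX_AGE_DAYS = 365           # assumed freshness requirement


def share(flags):
    """Fraction of True values in an iterable of booleans."""
    flags = list(flags)
    return sum(flags) / len(flags)


scores = {
    # Completeness: both required fields are populated.
    "completeness": share(
        r["email"] is not None and r["state"] is not None for r in records
    ),
    # Consistency: populated state values use the approved codes.
    "consistency": share(
        r["state"] in VALID_STATES for r in records if r["state"] is not None
    ),
    # Timeliness: the record was updated within the allowed window.
    "timeliness": share(
        (AS_OF - r["updated"]).days <= MAX_AGE_DAYS for r in records
    ),
}

# Hypothetical thresholds; each organization sets its own.
THRESHOLDS = {"completeness": 0.95, "consistency": 0.95, "timeliness": 0.70}
failing = sorted(name for name, score in scores.items() if score < THRESHOLDS[name])

print(scores)
print("Below threshold:", failing)
```

With this sample data, completeness and consistency fall below their thresholds while timeliness passes, which gives a concrete starting point for deciding where validation rules are needed first.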

Implement governance policies

Data governance plays a pivotal role in maintaining data quality. Within Azure, you can leverage Microsoft Purview or third-party Azure data quality tools to define and enforce governance policies, such as data ownership, access controls and data stewardship responsibilities. By clearly defining who is responsible for data quality at different stages, you can prevent quality issues from arising due to improper handling or lack of accountability.

Integrate data profiling and monitoring

Data profiling and monitoring are essential for continuously assessing the quality of your data. Use Azure data quality tools that allow you to analyze data for patterns, identify potential anomalies, and set up automated alerts for when data falls below acceptable thresholds. This ensures that any issues are detected early and can be addressed before they propagate through the system.

Leverage automation

Automation is key to maintaining high data quality at scale. By incorporating automation into your Azure data quality framework, such as through ETL processes or data quality management tools, you can streamline tasks like data cleansing, validation, and transformation. Automated workflows help enforce data quality rules consistently and reduce the likelihood of human error.

Integrate Master Data Management (MDM)

MDM plays a critical role in a comprehensive data quality framework. An MDM solution allows you to centralize and standardize core business data, such as customer or product information, ensuring consistency and accuracy across the enterprise. Integrating MDM with your Azure data ecosystem creates a trusted source of high-quality data for all your business operations.

Continuously refine and adapt

Data quality is not a one-time effort. Continuously monitor, review and adjust your framework as business needs evolve and new data sources or technologies are introduced. Use feedback from data stewards and stakeholders to improve data quality processes and governance policies over time.

By following these steps, you can create a dynamic and sustainable data quality framework within Azure, ensuring that your data meets the high standards necessary for driving business success.

Enforce Data Governance Standards with Master Data Management

Now that you have a formal data governance program in place — whether through a dedicated application or as a documented framework — you cannot let your hard work go to waste! Your rules, definitions and data modeling must be enforced on the data to ensure it is accurate, trusted and available across the enterprise.

In building and implementing your data governance program, you have already established that various source systems, departments and data types each have unique data quality and validation requirements; what is good for a CRM may not suffice in an ERP.

So rather than hiring data stewards to continually reconcile and correct data on an ad-hoc basis, or trying to enforce data standards upon entry in each source system, holistically manage your enterprise data with master data management (MDM).

MDM is a hub for matching and merging records and implementing data quality standards for undeniable trust. And much like a dedicated governance application can make your efforts much easier, you need an MDM platform that can be quickly implemented with a native Azure integration, is multidomain, and scalable across your organization.

MDM can handle data from multiple source systems both operating in Azure and from external sources, including ERPs, CRMs, custom applications, cloud applications, legacy apps and more. Scanning these data sources with Purview can build a catalog of what data is available from each source, and applying MDM to analyze common data domains can quickly identify data quality issues like missing, conflicting, incomplete, duplicated, and outdated information.

MDM corrects these problems by uniformly enforcing data quality standards (matching, merging, validating and correcting your data) and then syncing the results back to the sources.
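The match-and-merge idea can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how any particular MDM platform works: the company names, fields, the `match_key` rule and the "newest non-missing value wins" survivorship policy are all hypothetical.

```python
from collections import defaultdict

# Hypothetical records for fictional companies from two source systems.
source_records = [
    {"source": "CRM", "name": "Acme Freight Corp", "phone": None,
     "payment_terms": "Net 30", "updated": "2024-02-10"},
    {"source": "ERP", "name": "Acme Freight Corporation", "phone": "555-0100",
     "payment_terms": None, "updated": "2023-08-01"},
    {"source": "CRM", "name": "Birch Logistics", "phone": "555-0199",
     "payment_terms": "Net 45", "updated": "2024-01-05"},
]


def match_key(name: str) -> str:
    """Build a simple match key: lowercase and expand 'corp'."""
    tokens = name.lower().replace(".", "").split()
    return " ".join("corporation" if t == "corp" else t for t in tokens)


def merge(group: list[dict]) -> dict:
    """Merge matched records; the newest non-missing value survives."""
    golden = {}
    # ISO dates sort correctly as strings, so older records are applied first.
    for rec in sorted(group, key=lambda r: r["updated"]):
        for field, value in rec.items():
            if field in ("source", "updated"):
                continue
            if value is not None:
                golden[field] = value
    golden["sources"] = sorted({r["source"] for r in group})
    return golden


# Match: group records that share the same key.
groups = defaultdict(list)
for rec in source_records:
    groups[match_key(rec["name"])].append(rec)

# Merge: produce one golden record per group.
golden_records = [merge(g) for g in groups.values()]

for rec in golden_records:
    print(rec)
```

Here the two spellings of the first company collapse into one golden record that takes its phone number from the ERP and its payment terms from the CRM. Syncing that record back to each source is the step that keeps the systems from drifting apart again.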

MDM also excels in multi-domain use cases like combining householding, product and contract information for risk-based pricing in finance, or coupling provider, patient and treatment data in healthcare. In fact, almost all real-world business use cases rely on data from more than one master data domain.

Business outcomes depend on multiple domains, and multi-domain MDM handles domains for any use case. Customer domain data must be combined with product data to properly identify cross-selling and upselling opportunities. In manufacturing, you need data about items, planners, and facilities for strategic procurement. The more cross-domain utility you get from MDM, the greater your ROI.

Maximize the Return on Your Azure Investment with Trusted Data

The single source of truth that MDM can provide is the best path for high-quality, trusted data in Azure because of its multi-source, multi-domain design.

Don’t let poor-quality data derail your diverse Azure ecosystem. Use MDM to complement your data governance program and work with your existing systems and databases to effectively manage your data.

To learn more about how MDM can complement your Azure investment, explore the Profisee platform and see how it integrates into Azure to deliver high-quality, trusted data across the Azure data estate.

Ready to see MDM in action? Schedule a live demo to see how the Profisee MDM platform can solve your unique business challenges.

For the full story and technical details, download The Complete Guide to Data Governance and Master Data Management in Azure.

Forrest Brown

Forrest Brown is the Content Marketing Manager at Profisee and has been writing about B2B tech for eight years, spanning software categories like project management, enterprise resource planning (ERP) and now master data management (MDM). When he's not at work, Forrest enjoys playing music, writing and exploring the Atlanta food scene.